In part I of this post we looked at how chance plays a role in determining the result of rugby matches. We saw that no matter how well we think our team is prepared, they will sometimes suffer heavy losses against sides they are evenly matched against. A sports organisation who understands this and reacts appropriately can gain an advantage. If you haven’t already read part I, it might be a good idea to go and read it first, since in this article we will assume some of the concepts discussed in there are already understood.

In this post we will expand on the concept of how chance effects rugby games by looking at missed tackles between the same two evenly matched sides and using the same 5000 Monte Carlo match simulations presented in part I.

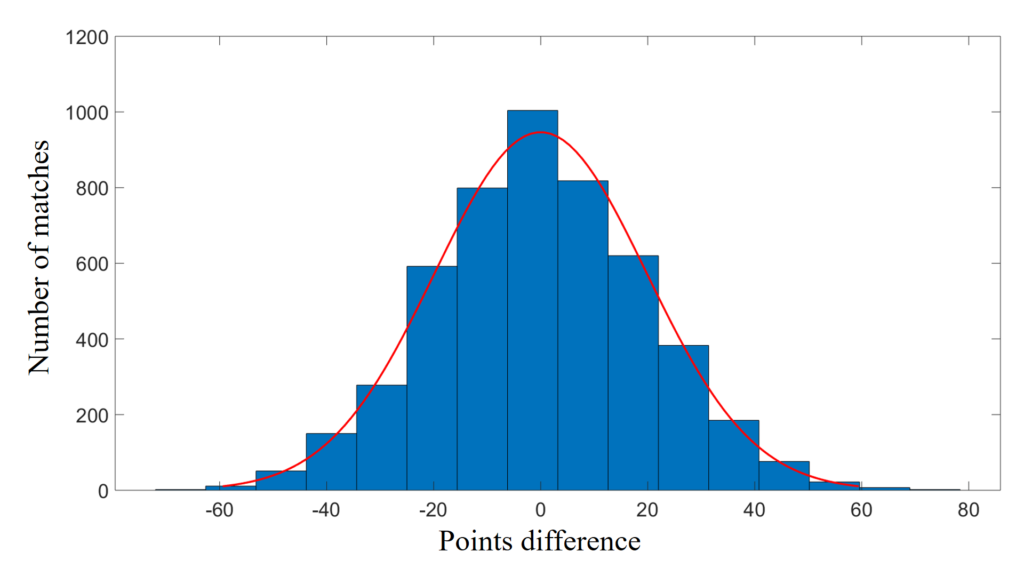

Let’s start by having a look at the missed tackle differential between the teams (home team missed tackles minus away team missed tackles), just as we looked at the points differential in the previous post.

We expect the mean missed tackle differential between two evenly matched sides to be zero. For our 5000 Monte Carlo simulations the mean was -0.1 with a standard deviation of 9.1. This mean is more than close enough to zero for our purposes, and we note that if we had carried out more simulations this value would have eventually converged in zero.

What would we expect the mean and standard deviation of the missed tackles of a real rugby competition to be, based on this result? Well we might expect the mean to be a little less than zero if the missed tackles are somehow involved in the hometeam advantage seen in part I, and the home team misses less tackles than the away team. We might also expect that the standard deviation will be larger since in a real competition not all matches will be played between such closely matched teams. This will result is a larger spread in missed tackles as the result of a larger skill differential between the sides, and hence a larger standard deviation. The mean and standard deviation of the missed tackle differential in the 2016 Super Rugby competition was -3.0 with a standard deviation of 11.2. As hypothesised, the mean is a little less than our simulations and the standard deviation larger, and this gives us some degree of confidence that things are as they should be.

Returning to the simulation results, by tallying up the simulation results we produce the table below which shows the probability of various missed tackle differentials from the perspective of the home team.

Probability of missing less and more tackles than the opposition for two evenly matched teams.| Result | Probability (%) |

|---|---|

| 15+ less missed tackles than opposition | 4.3 |

| 11 to 15 less missed tackles than opposition | 8.5 |

| 6 to 10 less missed tackles than opposition | 15.0 |

| 1 to 5 less missed tackles than opposition | 21.1 |

| Misses tackles equal to opposition | 4.5 |

| 1 to 5 more missed tackles than opposition | 20.1 |

| 6 to 10 more missed tackles than opposition | 14.3 |

| 11 to 15 more missed tackles than opposition | 7.6 |

| 15+ more missed tackles than opposition | 4.6 |

Let’s say that in a given match a team misses 6 more tackles than their opposition. Summing the bottom three rows of the table shows us they should expect to do this by chance 14.3 + 7.6 + 4.6 = 26.5 % of the time.

For arguments sake we’ll assume missing 6 or more tackles than our opposition would result in the coach berating his players for their poor defensive commitment and putting them through a defense focused training regime the following week, at the expense of time spent on other areas of training (since there is only so much training time available).

Is this an unjustified decision by the coach? Well, if the coach has no other valid reason to believe his defense has suddenly become worse, then it is absolutely an unjustified decision.

And this is where many people get into trouble with their thinking when making decisions like this. Their argument goes something like, ‘they defended poorly, therefore they need to train defense’. However, they only defended poorly in the misguided sense that it was not the result we hoped for. In reality, this is just a legitimate sample from the teams performance profile, or distribution of outcomes. No team or individual should be berated for producing expected samples from their distribution. To do so is nonsensical, just as it would be nonsensical to berate a coin for producing a head when we were hoping for a tail.

To try and understand this better let’s drill down a little further by looking at the individual performances in a single game where the home team missed 6 more tackles than their opposition. The table below shows the players who missed tackles in a single such game, and therefore contributed to the 6 more tackles missed than the opposition in this particular game.

Players who missed tackles on the home team in a single match in which the home team missed 6 more tackles than the away team| Position | Missed tackles |

|---|---|

| Prop | 3 |

| Openside flanker | 3 |

| Number eight | 3 |

| Hooker | 2 |

| Prop | 2 |

| Blindside flanker | 2 |

| Wing | 2 |

| Outside center | 2 |

| Lock | 1 |

| Halfback | 1 |

| Inside center | 1 |

| Fullback | 1 |

The table shows that 12 players contributed to a total of 23 missed tackles. The average missed tackles per game for a side in the 2016 Super Rugby contest was about 20, so this team missed 3 more than average. Because we know that they missed 6 more than their opposition in this particular game, we know that the opposition must have missed three less tackles than average. The worst offenders were three players who each missed three tackles each. Let’s pick on the first player listed in the table, the prop who missed three tackles, by examining his performances a little more closely. We’ll do this by looking at the his missed tackle performance profile as represented by the probabilities in the table below calculated over the 5000 Monte Carlo simulations for which we have data on him.

Single game missed tackle probabilities calculated from 5000 Monte Carlo match simulations for the prop (jersey number 1) on the home team.| Number of missed tackles | Probability (%) |

|---|---|

| 0 | 35.0 |

| 1 | 35.3 |

| 2 | 18.9 |

| 3 | 7.1 |

| 4+ | 3.6 |

The table shows us that our prop is normally a pretty reliable performer missing 0 or 1 tackles in more than 70% of the matches he plays. He will miss 3 or more tackles 7.1 + 3.6 = 10.7 % of the time. So whilst the 3 tackles he missed in the particular match above are not his usual performance, they still constitute expected results from his performance distribution. Therefore, unless we have other any valid reasons to believe he might be getting worse at tackling we should probably just except that this is the result of chance. Approaching him when there is no valid reason to do so may put unnecessary strain on the player coach relationship. If he missed say 4 or more tackles in consecutive games we might be more justified in taking some action since this would only be expected to happen 0.1 % of the time (0.036 x 0.036 x 100).

As a side note it will generally be better to monitor the proportion of successful skill executions. In the case of missed tackles this would be the number of missed tackles divided by the total tackles attempted. But that is a story for another day, and does not change the point we are trying to illustrate here.

So what should you do if you want to improve your teams tackling? Obviously you should train tackling. All we are saying here is that you should not make the decision to prioritise this training based on a flawed reactive understanding of probability. We’ve all seen teams who do this. One week they need to fix their defense. The next their defense is fixed but their handling needs work. Then they aren’t fit enough. Then the defense they miraculously fixed a few weeks back, has somehow broken again, and the cycle continues. This headless chook approach to coaching is a pretty good sign of a coach with no understanding of probability. Don’t be surprised to see their team sitting closer to the bottom of the ladder.

A team that can avoid this approach, will have a better chance of getting their training priorities right just by virtue of the fact they will be less likely to overcommit training time to certain areas, and as a result neglect others. In the long term they will gain advantage over other teams employing the headless chook approach.

Just what training priorities should be is another question all together. Naturally we should focus on those things that contribute the most to winning. But what are those? How do they differ for different teams? Those are questions we will look to answer in a future article.

Finally bare in mind that although this article has used tackling as an example, the general principles obviously apply to anything we might consider training. Bare in mind also that training players in a skill will result in their distribution or performance profile for that skill changing. This then becomes the new standard which they should be evaluated against when determining whether or not their is any reason to be concerned about their recent performances.